A great scope is more than just sharp glass. I surveyed 700+ shooters, and mechanical performance was rated as the most important feature of a scope. In fact, mechanical performance received 30% more votes than optical performance. Failing to track, or lacking repeatable adjustments, seems to be viewed as the biggest flaw a scope could have.

Mechanical performance is a critically important topic, but has been largely neglected in the shooting press. Here is what Dennis Sammut, Founder/President of Horus Vision, has to say on the subject:

“Yearly, a virtual mountain of written information is spewed forth from the word processor of gun writers. … When the subject is “riflescopes,” the writer’s primary focus is on external looks, dimensions, weight, reticle, image resolution, power range, and similar physical characteristics. It is impossible to find an article that evaluates a particular riflescope or runs a test on a group of a riflescope’s ability to accurately respond to elevation and windage knob adjustments.”

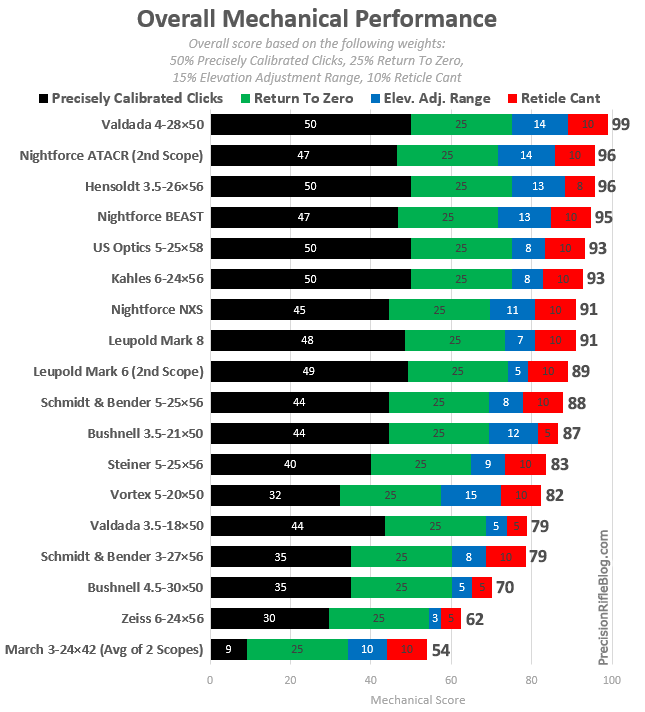

That was true until now. I conducted several objective tests focused specifically on mechanical performance. This post provides the overall summary and scores for each scope related to mechanical performance, and explains what those scores are based on. Mechanical Performance Part 1 reviewed the test and results that evaluated how precisely calibrated the mechanical clicks were on each scope. Part 2 reviewed several other tests related to mechanical performance, including return to zero, reticle cant, reticle calibration, elevation adjustment range, elevation travel per revolution, windage adjustment, and the live-fire, magnum box test.

Before anyone throws a fit about this not being the “right” breakdown … remember, I’ve published the details for every single piece of data this is based on, so feel free to calculate your own score based on whatever factors you’d like. I believe this is a good general breakdown, so I wanted to accommodate the guys that just want a higher-level overview without having to read the details of every test.

Here is a breakdown of what all played into the overall mechanical performance score:

Overall Mechanical Performance Score

- 50% Precisely Calibrated Clicks – This score indicates how well the advertised click adjustments matched the actual measured adjustment. For example, when you dial for 10.0 mils of adjustment, does that equate to exactly 10.0 mils … or is it 9.9 mils or 10.1 mils? Most turrets don’t track perfectly, even on these high-end scopes. In fact, I only found 4 of these scopes that tracked perfectly all the way to 20 mils of adjustment. I provide a detailed breakdown of how this element was calculated later in this post.

- 25% Return To Zero – This indicates the scope’s ability to repeatedly return to zero through multiple elevation adjustments. All scopes in this test were able to do this perfectly. However, I’m trying to establish a benchmark scoring system, because I may test mid-priced scopes in the future. This is an important element that not all scopes will be able to handle as well as these did, so I wanted to keep it as part of the mechanical score, even though all of these scopes received full credit. (Read how I tested this)

- 15% Max Elevation Adjustment Range – This is also referred to as a scope’s “Internal Adjustment Range” or “Overall Elevation Travel.” It’s simply the maximum amount of elevation adjustment the scope allows. I measured this directly. For long-range shooters, more is better. It’s frustrating to be limited by the travel built into your scope. For this score, a scope with 40 mils of adjustment or more received full credit, because that should be more than enough for 99.9% of shooters out there (it is enough adjustment for a 338 Lapua to reach beyond 2500 yards). A scope with 10 mils of adjustment or less received no credit. (Read how I measured this)

- 10% Reticle Cant – Some of these scopes had a measurable amount of cant in their reticle, which is when the crosshairs don’t perfectly align with the directions of the elevation and windage adjustments. I explain this more in Part 2, and include diagrams to help you visualize what I mean. In that post, I also look at what that means to your trajectory at long range, and that’s how I landed on the scoring where 2% or more reticle cant was unacceptable and the scope would receive no credit at that point. Obviously, if there was no measurable cant, the scope received full credit. (Read how I measured this)
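The weighting above can be sketched in a few lines of Python. This is only an illustration, not the exact spreadsheet behind the charts: the function names are made up for this example, and the straight-line scaling between the no-credit and full-credit points is an assumption, since only those two endpoints are described above.

```python
# Weights taken from the breakdown above.
WEIGHTS = {
    "calibrated_clicks": 0.50,
    "return_to_zero": 0.25,
    "elevation_range": 0.15,
    "reticle_cant": 0.10,
}


def linear_credit(value, zero_credit_at, full_credit_at):
    """Return 0.0 to 1.0 credit, scaled linearly between the two endpoints.

    The linear scaling is an assumption; only the endpoints come from the post.
    """
    fraction = (value - zero_credit_at) / (full_credit_at - zero_credit_at)
    return max(0.0, min(1.0, fraction))


def overall_mechanical_score(clicks_credit, return_to_zero_credit,
                             elevation_mils, cant_percent):
    """Combine the four elements into a 0-100 score.

    clicks_credit and return_to_zero_credit are already 0.0 to 1.0 values.
    """
    credits = {
        "calibrated_clicks": clicks_credit,
        "return_to_zero": return_to_zero_credit,
        # 10 mils or less: no credit. 40 mils or more: full credit.
        "elevation_range": linear_credit(elevation_mils, 10.0, 40.0),
        # 2% cant or more: no credit. No measurable cant: full credit.
        "reticle_cant": linear_credit(cant_percent, 2.0, 0.0),
    }
    return 100.0 * sum(WEIGHTS[name] * credit for name, credit in credits.items())


# Hypothetical scope: 90% click credit, perfect return to zero,
# 25 mils of travel, 0.5% reticle cant. Works out to roughly 85.
print(round(overall_mechanical_score(0.9, 1.0, 25.0, 0.5), 1))
```

Because every input is published, swapping in different weights or endpoints is just a matter of editing the `WEIGHTS` dictionary and the two `linear_credit` calls.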

So without further ado, here are the results for the overall mechanical performance score, weighted as described above:

I realize these results may not align with popular opinion within the shooting community, but I’d highly encourage you to go read how I conducted the tests and collected the data before you dismiss this. And, it may be fitting to repeat the disclaimer that I’m not affiliated with or sponsored by any of these manufacturers. Although a few have offered to pay to sponsor this website, I’ve declined every offer to date so that I can remain independent and don’t have to feel like I have to pull punches if I discover flaws in their products. I’ve definitely put a ton of effort into evaluating and presenting this information in the most honest and unbiased way possible.

While several scopes performed very well, only 4 scopes were PERFECTLY calibrated all the way through 20 mils of adjustment:

Since that was such a big part of the score, you can see that all of those scopes ended up towards the top of the list. The Nightforce ATACR 5-25×56 and Nightforce BEAST 5-25×56 were also notable finishers in terms of mechanical performance, and their extremely generous elevation travel allowed them to end up among the other 4 scopes I itemized above. The Leupold Mark 6 3-18×44 and Leupold Mark 8 3.5-25×56 scopes also performed very well, with both finishing in the top half.

You might notice that the Leupold Mark 6 and Nightforce ATACR both have “2nd scope” by their label, and the March Tactical 3-24×42 FFP scope has “Average of 2 scopes.” The first time I ran through the mechanical tests, those 3 test scopes showed more error than others in the test. I thought the results might be due to a defective unit, so I contacted each manufacturer. I completely understand that it is impossible (and impractical) for every scope to be perfect, so I always want to give a manufacturer a chance to fix something like that before I publish results that may not be representative of the typical unit. At the same time, I’m committed to being completely transparent and honest with my readers. So if I run into something like this, I give the manufacturer a shot at fixing it, and then in the article I mention the issues I ran into and how it worked out in the end. That seems like the most respectful and fair approach for both the manufacturers and readers.

So Leupold, March, and Nightforce were all kind enough to send me another test scope (I didn’t have time to wait on the units I had to be repaired, since this project was already running behind schedule). When I retested the new Leupold Mark 6 and the Nightforce ATACR scopes, they both performed considerably better than the original scopes. The first Leupold Mark 6 had an average of 3.7% of error in the elevation adjustment through 20 mils, while the replacement performed stunningly with an average of just 0.1% of error. The Nightforce ATACR followed suit with the original coming in with an average of 1.8% of error in the elevation adjustment through 20 mils, and the replacement coming in with 0.4% of error. In both of those cases, I feel like it was an issue with the particular scope and the original results were not indicative of what you can reasonably expect from Leupold or Nightforce. Either of those companies would quickly repair any scopes that performed like the original set of scopes I tested. So in both cases, I’ve only included how the second scope performed in the charts and scoring.

However, the replacement March scope that Kelbly.com sent unfortunately didn’t follow that same pattern. While the 2nd March scope performed similarly to the original, it was actually slightly worse overall. The original scope had an average of 2.2% of error in the elevation adjustment through 20 mils, and the replacement had an average of 2.7% of error. I really didn’t know exactly what to do with those results, because with such similar results, this didn’t seem to simply be due to a defective unit, as it was with the Leupold and Nightforce scopes. I decided the best approach was to simply average the results from both scopes and publish that as my results for the March scope.

Scoring for Precisely Calibrated Clicks

I went into exhaustive detail regarding how I performed these tests, and the data I collected in Part 1 of the mechanical performance, so I won’t repeat that info here. I essentially tested how well the elevation turret adjustment aligned with what was advertised and indexed on the scope. I used Horus calibration targets and Spuhr scope mounts, and was extremely careful about how I performed the tests. Here is a quick image that shows the basic setup, but please check out Part 1 for more details.

I checked each scope at 4 different adjustments: 5 mils, 10 mils, 15 mils, and 20 mils. Most of the scopes used mils, but a few used MOA adjustments, and the Zeiss scope actually used Shooter’s MOA (which is slightly different). I also tested those scopes at 4 similar adjustment intervals.
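For anyone comparing across the three adjustment systems, here is a rough conversion sketch. It assumes a mil is a true milliradian (1/1000 of the distance), uses the small-angle approximation, and treats Shooter’s MOA as exactly 1 inch per 100 yards. The function names are hypothetical and only for illustration.

```python
import math

INCHES_PER_100_YARDS = 100 * 36  # 3600 inches

# Inches subtended at 100 yards by one unit of each adjustment type.
INCHES_PER_UNIT = {
    "mil": INCHES_PER_100_YARDS / 1000.0,                          # 3.6 inches
    "moa": INCHES_PER_100_YARDS * math.tan(math.radians(1 / 60)),  # about 1.047 inches
    "smoa": 1.0,                                                   # Shooter's MOA
}


def inches_at_100_yards(amount, unit):
    """Linear (small-angle) size of an adjustment at 100 yards."""
    return amount * INCHES_PER_UNIT[unit]


def convert(amount, from_unit, to_unit):
    """Convert an adjustment between mil, moa, and smoa."""
    return inches_at_100_yards(amount, from_unit) / INCHES_PER_UNIT[to_unit]


# 20 mils is about 72 inches at 100 yards, which is 72 Shooter's MOA
# or a little under 69 true MOA.
print(round(inches_at_100_yards(20, "mil"), 2))
print(round(convert(20, "mil", "moa"), 2))
```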

Although testing up to 20 mils of adjustment may seem extreme, any error in the turret typically has a compounding effect, so even a small amount of error becomes measurable at that extreme adjustment. But for the scoring, I weighted error found at 5 mils the heaviest, with decreasing weight for each increment up to 20 mils. I did this because most shooters live in those lower adjustments, so they’re weighted more heavily. The 5 mil adjustment represents 40% of the score, 10 mil adjustment is 30%, 15 mil adjustment is 20%, and the 20 mil adjustment is 10% of the score.
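To make the compounding effect concrete, here is a small worked example. It assumes the error is a constant percentage of whatever is dialed, and the helper names are invented for this sketch.

```python
def click_error_mils(dialed_mils, error_percent):
    """Difference between dialed and actual adjustment, in mils."""
    return dialed_mils * error_percent / 100.0


def miss_inches(dialed_mils, error_percent, distance_yards):
    """Vertical miss at the target caused by the turret error.

    One mil subtends 1/1000 of the distance, and there are 36 inches per yard.
    """
    inches_per_mil = distance_yards * 36.0 / 1000.0
    return click_error_mils(dialed_mils, error_percent) * inches_per_mil


# The same 1% error grows with the amount dialed:
for dialed in (5, 10, 15, 20):
    print(dialed, "mils dialed ->", round(click_error_mils(dialed, 1.0), 3), "mil of error")

# Hypothetical shot: 10 mils dialed for a 1,000 yard target with 1% turret error.
print(round(miss_inches(10, 1.0, 1000), 1), "inches off at the target")
```

With those made-up numbers, 1% of error is only 0.05 mil at the 5 mil mark, but 0.2 mil at 20 mils, and the 10 mil shot at 1,000 yards lands about 3.6 inches off.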

Then, I had to decide how much a certain amount of error should penalize the score. In Part 1, I walked through a few examples that showed how much 1-2% error equates to at long-range. I decided 3% of error would make it very tough to account for and get shots on target, so if 3% of error or more was found the scope would not receive credit for that adjustment. If the scope was dead on (i.e. 5.0 mils of adjustment on the scope equates to exactly 5.0 mils of adjustment at 100 yards), then it received full credit.
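Put together, the weights and the penalty can be sketched like this. The 40/30/20/10 weights and the 3% cutoff come from the text above; the straight-line drop from full credit at 0% error to no credit at 3% is an assumption made for this example, and the function names are hypothetical.

```python
# Weight of each tested adjustment (in mils) within this score.
ADJUSTMENT_WEIGHTS = {5: 0.40, 10: 0.30, 15: 0.20, 20: 0.10}

# At this much error or more, the adjustment receives no credit.
NO_CREDIT_ERROR_PERCENT = 3.0


def credit_for_error(error_percent):
    """Return 0.0 to 1.0 credit for one adjustment.

    None means the scope could not reach that adjustment, which earns no credit.
    The linear falloff between 0% and 3% is an assumption.
    """
    if error_percent is None:
        return 0.0
    return max(0.0, 1.0 - abs(error_percent) / NO_CREDIT_ERROR_PERCENT)


def calibrated_clicks_score(errors_by_adjustment):
    """Weighted 0-100 score from a {mils: error_percent} mapping."""
    total = sum(
        weight * credit_for_error(errors_by_adjustment.get(mils))
        for mils, weight in ADJUSTMENT_WEIGHTS.items()
    )
    return 100.0 * total


# Hypothetical scope: dead on at 5 and 10 mils, 1.5% off at 15, 3% off at 20.
example_errors = {5: 0.0, 10: 0.0, 15: 1.5, 20: 3.0}
print(round(calibrated_clicks_score(example_errors), 1))  # roughly 80
```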

So here are the scores for Precisely Calibrated Clicks, according to the weights and scoring technique outlined above. For more details or to see the underlying data, check out Mechanical Performance Part 1.

*The Zeiss Victory Diavari 6-24×56 scope actually didn’t have enough elevation travel to get to the 4th adjustment (72” at 100 yards), so it didn’t receive credit for that one (but that only makes up 10% of the calibrated clicks score). The Zeiss Victory scope was the only scope in this class that didn’t provide at least that much adjustment. The average among the other scopes was 30 mils, but the Zeiss had the equivalent of 16 mils. See Part 2 for a direct, visual comparison of all the scopes’ elevation travel.

You can clearly see the 4 scopes that were perfect through all four adjustments at the top of the chart. 12 scopes were dead on at 5 mils, and 7 were dead on at 10 mils. I measured this down to 1/2 click granularity.

Many shooters don’t need to adjust beyond 10 mils, because that is enough adjustment to take most modern cartridges to 1,000 yards. Here is a little larger list with scopes that performed perfectly up to at least 10 mils:

- Hensoldt ZF 3.5-26×56

- Kahles K 6-24×56

- Leupold Mark 6 3-18×44

- Leupold Mark 8 3.5-25×56

- Nightforce ATACR 5-25×56

- US Optics ER25 5-25×58

- Valdada IOR RECON Tactical 4-28×50

And here are a few scopes that were very close to perfect up to 10 mils. The scopes below weren’t off by more than 1/2 click at either the 5 mil or 10 mil adjustment, which is still great performance and more than adequate for most shooters:

- Bushnell Elite Tactical 3.5-21×50

- Nightforce BEAST 5-25×56

- Nightforce NXS 5.5-22×50

- Schmidt and Bender PMII 5-25×56

- Valdada IOR 3.5-18×50

- Steiner Military 5-25×56

Other Posts in this Series

This is just one of a whole series of posts related to this high-end tactical scope field test. Here are links to the others:

- Field Test Overview & Rifle Scope Line-Up: Overview of how I came up with the tests, what scopes were included, and where each scope came from.

- Optical Performance Results

  - Summary & Part 1: Provides summary and overall score for optical performance. Explains how optical clarity (i.e. image quality) was measured, and provides detailed results for those tests.

  - Part 2: Covers detailed results for measured field of view, max magnification, and zoom ratio.

- Ergonomics & Experience Behind the Scope

  - Part 1: Side-by-side comparisons on topics like weight, size, eye relief, and how easy turrets are to use and read.

  - Part 2 & Part 3: Goes through each scope highlighting the unique features, provides a demo video from the shooter’s perspective, and includes a photo gallery with shots from every angle.

  - Summary: Provides overall scores related to ergonomics and explains what those are based on.

- Advanced Features

  - Reticles: See every tactical reticle offered on each scope.

  - Misc Features: Covers features like illumination, focal plane, zero stop, locking turrets, MTC, mil-spec anodizing, and one-piece tubes.

  - Warranty & Where They’re Made: Shows where each scope is made, and covers the details of the warranty terms and where the work is performed.

  - Summary: Overall scores related to advanced features and how those were calculated.

- Mechanical Performance

- Summary & Overall Scores: Provides summary and overall score for entire field test.

PrecisionRifleBlog.com A DATA-DRIVEN Approach to Precision Rifles, Optics & Gear

Thanks Cal for your ongoing good work in what is a difficult and subjective (from the consumer perspective) field.

The conversation about what is a “standard” MIL has set me off on an adventure around the Western militaries to see what they use. UK and Australian Forces use the 6400 scale – noting that it is easier to calculate on this figure. 6400 is also the NATO standard so it seems the US approach is less than unified on this issue.

Technology has also changed markedly from the 1960s and 70s when wire was used as a reticle instead of the laser etching used today.

The other issue that has come up from these global conversations is “relative tolerance”. With 1 MIL subtending 1cm at 100m, there are very few shooters who could shoot to that tolerance from a bipod. (Note that the best a sling shooter can expect is 1 MOA).

What does this mean for scope performance? I must admit that I am in favour of tuning and checking everything – so the load works at 100m, the scope works and you gather and record shot data out to your longest possible distance. And you practice because with the quality scopes available today, they will show you where the errors in your shooting lie.

Hey Richard, this has actually set me off on an adventure as well. I hope to do a more in-depth post on this after I wrap up the field test results. I’ve asked each manufacturer a few questions directly, and have also asked several industry experts. I should have a pretty wide view on this topic. Thanks for the comments!

Don’t the USA and USMC use different values for a mil?

Great question. There is a ton of confusion about this. Here is a quote on this subject from A Shooters Guide to Understanding MILS and MOA by Robert J. Simeone:

I don’t know the author of that personally, and can’t find much info about him … but Frank from Sniper’s Hide recently made a comment around this topic that seemed really helpful and lines up with the quote above:

I think Frank owns just about every scope ever created and he was also a sniper in the US military, so I’m going with his experience here. There is certainly a lot of confusion and misinformation around this topic, but it sounds like 1/6283 is used by all US military and in the US consumer market as well. There may be a few outliers worldwide that use the NATO standard, but it’s rare in the USA.

You wanted to say 0.1 mil is 1 cm at 100m

Yes – 0.1 Mil subtending etc.

Hi Richard,

One observation: I think you are mistaken when you say that 1MIL is 1cm at 100m. From what I knew off the top of my head, 1MIL is 10cm at 100m, which means roughly 3MOA, and a quick search confirms it: “1 trigonometric milliradian (mrad) ≈ 3.43774677078493 MOA. 1 NATO mil = 3.375 MOA (exactly)” (from: https://en.wikipedia.org/wiki/Angular_mil#Use).

Cal,

I just wanted to congratulate you on a fine job. You provided the data necessary to repeat everything (if necessary) and I have no doubt that this is the single finest head to head test of these scopes ever performed. Data driven is the right approach and you didn’t disappoint in this difficult field. You have a life-long reader, don’t disappear!

Now I can’t wait to see what is next! I would love to see you do something similar for factory ammo, ranging scopes, and muzzle brakes/suppressors. I am subscribed and I’ll keep coming back. Thanks!

Thanks for the encouragement, Nick. I’m glad you found the info & approach helpful. I definitely have some ideas for other field tests I’d like to do in the future. It might take me a couple months to recover from this one, because it was a ridiculous amount of time and energy … but I’m sure I’ll start on another one at some point.

Again, great data driven approach to this test. Everyone has certain features that are on the top of their list when scope shopping and I wouldn’t have put KAHLES on my list. Your test put them on my radar (tracking and FOV huge in my book). Thanks!

Let me repeat, the quality of this work is worthy of being published in a book.

Very thorough and informative. Thank you for all your effort, and with similar tests in the future.

I would still choose the USOptics over the rest.

I would love you to evaluate hunting or LRH scopes in the medium range 600-1000 yards and medium price range around $1500. The G7, Huskemaw, Greybull, Vortex, Trijicon, Quigley-Ford, SWFA, Bushnell, Nikon, Burris. Some of these rifle scopes have been videoed many many times making kills in the 600-1,000 arena yet are sneered at by many tactical shooters. There is particular bias vs MOA reticles and turrets. As an ex USAF pilot & AAL Captain I can tell you aviation is a very exacting field and yet it still uses inches, feet, yards and miles. I understand that most enlisted military use mils but a very large market segment was, is and will be more quick in their conceptual understanding and computations without the metric monkey on their back no matter the pure theoretical advantages.

Hey CR Rains, I totally hear you. I actually was a hunter originally, and that is where I developed a love for rifles and the gateway drug that got me into the long-range, precision rifle game. Honestly, I’m not sure I will do any more scope tests. It was a lot of work, especially for the heavy criticism I received throughout the entire process. I know a lot of people loved it and appreciated it, but my in-depth scope testing days may be over. However, I’m hopeful that other guys might use the benchmark I’ve defined here. I already have one company in Europe that contacted me for a few more details because they were planning to run the exact same tests on a few scopes.

On the MIL vs. MOA debate … there certainly isn’t one that is inherently better than another. I wrote a very in-depth post about this debate, which I feel is the most complete and objective content on the differences between the two systems. There are only a few minor differences, which typically get blown WAY out of proportion by supporters of one side or the other. Here is the link to the comparison I’m talking about:

MIL vs MOA: An Objective Comparison

Thanks for the comments!

The hell with those criticisms. They’re probably trying to justify their purchases. They can go to youtube if they want a subjective review of their purchase. Many would appreciate reviews of scopes in the $700-$1500 range since that’s all they may be able to afford, although I would like to see how the Steiner T5xi and new Razor do. Imagine the controversy you would get if an $800 scope mechanically and optically outperformed some of the popular ones on this list. Also, keep in mind that you now have a process and the equipment so the next scope test should move faster. Excellent work Cal.

Thanks, VMan. I’d love to see how the Vortex Razor Gen II stacks up, as well as the Tangent Theta.

I may do the mid-priced scopes at some point. You’re correct in saying it’d be less time. I spent a ton of time developing the benchmark tests. I tried to talk to every optic engineering team represented, and asked each of them to review the tests I was planning before I started. Plus I asked other respected industry guys like Frank and BigJimFish of SnipersHide.com, and both of those guys and a lot of others helped me tweak the tests to be as objective as possible. So I wouldn’t have to do all that work again, but it’d still be a significant time investment. I’m still considering it, but haven’t put it on the schedule yet.

Thanks for the comments!

Cal

Aaaah, keystroke error. 😀

Not sure where I left off editing, but nonetheless, awesome job!

Hopefully, whatever I posted isn’t misunderstood. I just wanted to say, I have to reconsider choices after the March fell off the wagon. I’m looking to glass an AR10 SASS.

Will have to re-read…

Can anyone point me to a review like this that includes the Vortex Razor HD II 4.5-27? I am looking to make a purchase.

I haven’t seen anyone do a review like this.

Do you have plans to do this for scopes under $1500? Several of my friends and I would love to see that.

No plans at this point. This test definitely ended up being a ton more work than I expected. I haven’t decided for sure that I wouldn’t ever do a follow-up, but I don’t see it in the near future. I realize that probably isn’t the answer you were hoping for, but I do this all in my spare time … for free. I have a young family and demanding job with a lot of leadership responsibility, so taking on another huge project might not be in the cards right now.

I do know of a couple other people who are planning or even performing some of the same benchmark tests, and my hope is that will catch on. It would be awesome if we had a library of objective scope comparisons like this … that will just take more energy than I can throw at it at this point.

Thanks,

Cal

I understand, and thank you for doing all the work you have already done.