Overall Summary of Optical Performance & Score

I wanted to give a quick summary of the results related to optical performance for those who prefer to just read the “executive summary.” For my more detailed readers, don’t worry … I go through exhaustive detail of each test as well.

There are a few major aspects to optical performance for a long-range rifle scope, and I’ve tried to weight the major elements appropriately for the overall score. The majority of the score is obviously optical clarity, which refers to the image quality you see through the scope. But just because a scope is crystal clear doesn’t mean it is ideal for long-range shooting. The apparent field of view on these scopes varied significantly, so a very narrow or very wide field of view could impact the overall performance as well. Finally, part of the score is based on zoom ratio, which describes the magnification range of a scope. A 6-24x scope has a zoom ratio of 4 (24 ÷ 6), whereas a 4-28x scope has a zoom ratio of 7 (28 ÷ 4). All other things being equal, a larger zoom ratio just makes the scope more flexible. I also measured the actual maximum magnification for each scope, and the zoom ratio is based on that measured data (not simply what the manufacturer advertises).

| Element | Weight |
|---|---|
| Optical Clarity (resolution & contrast) | 70% |
| Field of View (measured at 18x for direct comparability) | 15% |
| Zoom Ratio (range of magnification, based on the measured max zoom) | 15% |

You can see there were 3 rifle scopes that stood out among the crowd when it came to optical performance:

These scopes were stunning in terms of overall image quality. The Zeiss Victory Diavari 6-24×56 took the prize for pure optical clarity (also commonly referred to as image quality), but it also had one of the smallest zoom ratios of the entire group. The Schmidt and Bender PMII 5-25×56 was right behind the Zeiss in terms of image quality, and it also had one of the wider fields of view among these rifle scopes. But although its zoom ratio was slightly higher than the Zeiss, it still had a small magnification range relative to many of the newer designs represented here. The Hensoldt ZF 3.5-26×56 was released just a couple months ago, and it represents the latest scope design techniques. It had an enormous zoom ratio, from 3.5x on the low end to 26x on the high end. The image quality of the Hensoldt scope was also top notch.

Note: If you don’t agree with how the scores are weighted, don’t freak out. I provide the detailed results for each area, and you can ignore these overall scores if you’d like or even calculate your own based on different weights. They’re just intended to give a high-level overview of all the findings in this area, and weighted as the typical long-range shooter would likely rank them.
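If you want to try your own weights, here is a minimal Python sketch of the arithmetic. It assumes each of the three sub-scores has already been put on a 0-100 scale (how the field of view and zoom ratio sub-scores were scaled isn't covered in this section). The function names and the sample numbers are hypothetical and only there for illustration; they are not results from the test.

```python
# Default weights taken from the weighting table in this post.
DEFAULT_WEIGHTS = {
    "optical_clarity": 0.70,
    "field_of_view": 0.15,
    "zoom_ratio": 0.15,
}


def zoom_ratio(min_magnification, max_magnification):
    """Zoom ratio as described in the post: max magnification / min magnification."""
    return max_magnification / min_magnification


def overall_optical_score(sub_scores, weights=DEFAULT_WEIGHTS):
    """Weighted average of sub-scores (ASSUMED to already be on a 0-100 scale)."""
    if set(sub_scores) != set(weights):
        raise ValueError("sub_scores and weights must use the same keys")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    # Dividing by the total lets you pass weights that don't sum to exactly 1.
    return sum(sub_scores[k] * weights[k] for k in weights) / total_weight


# Hypothetical sub-scores for one scope (NOT measured data).
example = {"optical_clarity": 90.0, "field_of_view": 60.0, "zoom_ratio": 40.0}

print(zoom_ratio(6, 24))               # the 6-24x example from the post
print(overall_optical_score(example))  # using the post's 70/15/15 weights

# Re-weighting for a shooter who cares more about field of view and zoom range.
my_weights = {"optical_clarity": 0.50, "field_of_view": 0.25, "zoom_ratio": 0.25}
print(overall_optical_score(example, my_weights))
```

Because the function divides by the sum of the weights, you can also pass whole-number weights like 50/25/25 and get the same ranking.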

Optical Clarity (Resolution & Contrast)

When I talk about optical clarity, you can think about that as image quality. This is obviously an important feature of a rifle scope, but it’s also extremely complex, highly technical, and often the topic of heated debate. Many point to things like coatings, HD or ED glass, or where the glass is made as indicators of image quality. Here is how optics expert, ILya Koshkin, sees it:

How do you sell good image quality? Every magazine ad for every scope company for a riflescope talks about how well you can see. You pretty much have to tout something: patented coating recipe, extra low-dispersion glass, “high definition” glass, etc. None of these things by themselves are of any importance and (by my estimate) nearly 100% of what you see in a typical advertisement is, at best, misleading and at worst, pure BS. However, all these tricks are necessary for attracting enough attention to a particular product to at least get you to consider it. – ILya Koshkin (from Rifle Scope Fundamentals)

While coatings and glass specs certainly play into image quality, they aren’t what we really care about … at least not directly. So instead of comparing all those aspects, I focused my tests on the end result we actually care about: How much detail can you see through the scope? How well does the scope transmit contrast?

I spent a ton of time trying to come up with a data-driven approach to quantify optical clarity, because these were the only tests I ran that couldn’t be measured directly. By that, I mean this is a subjective matter, and requires a person to sit behind the scope and make judgments on what they can and can’t see. So I was particularly cautious in how I conducted these tests in order to mitigate bias or other outside factors that could skew the results.

Many brilliant people gave me advice on these tests, including professionals whose full-time job is testing optics. What I ended up with was an original, straightforward approach. I feel it’s as objective as practically possible for an independent tester (without buying $100,000+ in specialized equipment).

The Double-Blind Tests

To start, I conducted double-blind tests to evaluate optical clarity. That means neither the scope testers nor the conductor of the tests could tell which scope was which. I carefully wrapped each scope, to disguise the brand, size, shape, color, turret design, and anything else I could reasonably obscure. To be honest, a couple times, I thought I knew what scope a tester was handling, and I was wrong 3 times out of 3. The scopes were very well disguised! These blind tests were intended to prevent brand bias. I was afraid if someone saw a brand name that they thought should perform well they might subconsciously try harder to fulfill that expectation, or if they saw a brand with a weaker reputation, they might not put in as much effort. By hiding the brand, we helped level the playing field.

Each scope had a unique letter taped to it that was attached to them at random. I recorded the results for each scope by the letter ID, and only matched that up with the actual scope underneath it after all the testing was complete.
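For anyone wanting to repeat this kind of blind labeling, the random letter assignment could be sketched in Python like this. I did the real thing with tape and a notebook, so treat this purely as an illustration: the function name and the placeholder scope names are hypothetical, and it assumes there are no more scopes than letters in the alphabet.

```python
import secrets
import string


def assign_blind_labels(scope_names):
    """Return {letter: scope_name}, pairing letters with scopes at random."""
    if len(scope_names) > len(string.ascii_uppercase):
        raise ValueError("more scopes than available single-letter labels")
    rng = secrets.SystemRandom()   # OS-backed randomness, not a seeded PRNG
    shuffled = list(scope_names)   # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    letters = string.ascii_uppercase[: len(shuffled)]
    return dict(zip(letters, shuffled))


# Placeholder names; the key stays sealed until all results are recorded.
key = assign_blind_labels(["Scope 1", "Scope 2", "Scope 3", "Scope 4"])
print(sorted(key))  # only the letters are visible during testing
```

Results get recorded against the letters only, and the `key` mapping is looked up after the last tester is done.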

I still allowed testers access to the parallax knob (a.k.a. side-focus adjustment), and they were encouraged to use it to adjust each scope to their eyes. They could adjust it by reaching under the v-block, without having to visually see the knob. A few scopes had unique designs for target focus (like a focus ring on the objective bell, or around the elevation turret), and I found a way to allow them to make those adjustments as well.

Testers & Sample Size

I specifically chose a set of six testers, most of whom were “disinterested parties” (i.e. they weren’t in the market for one of these scopes, and may have never seen a tactical reticle or even owned a long-range rifle). I obviously wanted “disinterested parties” to help mitigate bias. Testers ranged in age from 30 to 80 years old, and everything in between.

The six-person sample size should also help normalize the results, just meaning they couldn’t be heavily skewed by one or two testers. My statistician friend tells me that ideally I’d have a sample size of 30 people, but it’s hard to find 30 “disinterested parties” willing to give up hours to help me with a boring (almost clinical) test … especially for free. So a BIG thanks to the six friends who did help!

I also wanted a larger sample size (i.e. six people instead of just one or two), because everyone’s eyes are slightly different. Some people’s eyes may be more sensitive to resolution (ability to see fine detail) or contrast (ability to differentiate between light/dark and colors), which can impact the apparent clarity they experience behind a scope. Here is an excerpt from an excellent article by ILya Koshkin that explains these competing design characteristics:

I have heard people say that resolution and contrast go hand in hand. That is not, strictly speaking, correct. They are in a perpetual match of “tug of war.” It is impossible to optimize both of them to be as high as possible. If resolution is fully optimized, contrast suffers, and vice versa. In an image with high resolution, but low contrast, there may be a lot of fine detail, but you might have a hard time distinguishing between them, since they do not stand out much. Conversely, if an image has high contrast, but low resolution, all the large details will be very distinct (a common term is to say that they “pop” out at you), but small details will simply not be present. While ideally you would want to have an image with both high contrast and high resolution, that is not easy to achieve. For every optical system, the designer has to compromise between resolution and contrast in order to achieve a well-balanced image. – ILya Koshkin

Ultimately, the average performance over a six-person sample size should give you a good idea of whether a scope will be more or less likely to be clear and sharp for someone’s eyes. Yes, each person’s eyes are different, but we didn’t see substantial variations between the testers.

About The Test Setup

I first set all the scopes to 18x magnification, which isn’t as easy as it sounds. A few people had mentioned that you can’t trust the marked indexes on the scope, so I wanted to ensure I really was at exactly 18x for each scope. That would allow an apples-to-apples comparison of optical clarity, instead of comparing the detail you could see with a 30x scope against an 18x scope. I essentially found a reliable way to ensure I had each scope set at exactly 18x, and while it was very time consuming … it was also straightforward. I explain it in detail in the How To Measure the Apparent Magnification of a Scope post.

I also set the erectors on each scope to be in the middle for the optics tests, meaning they were centered within the body. This should help minimize any optical skew. I accomplished this by simply putting the scope in a V-block and aiming it at a target. I’d then rotate the scope 360 degrees (without moving the V-block), and adjust the elevation and windage knobs towards the target. I knew the scope was centered if I could have the elevation turret pointing up and it was on target, and I could rotate it with the elevation turret to the left, down, or right and it should still be pointing at the target the whole time.

At first, I tried to set up the tests outdoors, but noticed the ambient light had a big impact on what you could see. I might be able to see something easily near sunset, but I couldn’t even get close to seeing the same level of detail midday. My pupils would dilate or constrict with the ambient light, and therefore change the amount of light that made it into my eye. My goal was to make all the tests completely repeatable, so I decided to move the optics tests indoors. Luckily, I have access to a 100+ yard long hallway at my church. This allowed me to completely control lighting, and eliminate any possibility for mirage. It was a great optics test environment.

According to the British Standards Institution specifications, when using test charts to determine visual acuity “the luminance of the presentation shall be uniform” (from BS 4274-1:2003). This is why I used a continuous lighting kit, with a photography umbrella to bounce light onto the charts. This prevents harsh, uneven direct light. I turned off the other lights in the area so that there was a single source of controlled, even light.

I essentially set up 9 different charts exactly 100 yards away, and each chart had a couple things on it. The first was a custom Snellen eye exam chart, which is the same type of chart you read when you go to the eye doctor. I asked each tester to read the smallest letters they could possibly make out, and I graded their accuracy. Each of the 9 charts had a cryptographically random, non-repeating string of characters. Each string of characters was completely unique, and didn’t match any string on any other chart. This ensured testers couldn’t memorize patterns, and were really forced to read each letter through each scope. No chance of cheating … either they could read it or they couldn’t. I followed the British Standards Institution specification for Snellen charts and only used the letters C, D, E, F, H, K, N, P, R, U, V, and Z, based upon equal legibility of the letters (from BS 4274-1:2003).
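Here is a rough Python sketch of how chart strings like these could be generated. It is not the exact tool I used, and it bakes in a few assumptions: 6 letters per line (matching the "6 for 6" scoring), 6 lines per chart as a placeholder, and "non-repeating" interpreted as no letter used twice within a line and no line appearing twice across the charts. The function names are hypothetical.

```python
import secrets

# The 12 letters listed above as equally legible (BS 4274-1:2003).
OPTOTYPE_LETTERS = list("CDEFHKNPRUVZ")


def random_line(rng, letters_per_line=6):
    """One line of distinct letters in random order (ASSUMPTION: no repeats in a line)."""
    return "".join(rng.sample(OPTOTYPE_LETTERS, letters_per_line))


def build_charts(num_charts=9, lines_per_chart=6, letters_per_line=6):
    """Return a list of charts; each chart is a list of unique letter strings."""
    rng = secrets.SystemRandom()  # draws from the operating system's CSPRNG
    seen = set()                  # every line generated so far, across all charts
    charts = []
    for _ in range(num_charts):
        chart = []
        while len(chart) < lines_per_chart:
            line = random_line(rng, letters_per_line)
            if line not in seen:  # reject any string already used on any chart
                seen.add(line)
                chart.append(line)
        charts.append(chart)
    return charts


charts = build_charts()
for number, chart in enumerate(charts, start=1):
    print(f"Chart {number}: {' / '.join(chart)}")
```

Using `secrets.SystemRandom` instead of the default `random` module is what makes the strings unpredictable rather than reproducible from a seed.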

For scoring, testers were awarded more points for smaller letters. Testers only received points for letters they read correctly. If they read a line correctly, they were also awarded points for all of the lines above it (i.e. the lines with larger letters than the one they read).

The diagram below shows the height of each line, along with the max score awarded for reading the line 100% correctly (6 for 6). The score was calculated based on the relative size of the letters.

So if a tester was able to read line 5 with 100% accuracy, but couldn’t make out line 6, they’d be awarded the full 83 points for line 5 plus the sum of the points for all lines above that. So the total points awarded would be 83+67+50+33+5, which equals 238. If another tester was able to accurately read 3 of the 6 letters on line 6, he’d be awarded 50% of the max score for that line. The max points for line 6 is 100, so he’d be awarded 50 points, and that would be added to the 238 for lines 1 through 5, for a total score of 288.
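The scoring rule above can be sketched in a few lines of Python. The max points for lines 1 through 6 are taken from the worked example (5, 33, 50, 67, 83, 100); any smaller lines on the chart aren't listed in the text, so they are left out here. The function name is hypothetical, and it assumes the tester gets full credit for every line larger than the smallest one they attempted.

```python
# Max points per line, line 1 (largest letters) first, from the worked example.
LINE_MAX_POINTS = [5, 33, 50, 67, 83, 100]
LETTERS_PER_LINE = 6


def snellen_score(smallest_line, letters_correct, line_points=LINE_MAX_POINTS):
    """Score for the smallest line attempted (1-based) plus all larger lines.

    ASSUMPTION: every line above `smallest_line` was read 100% correctly,
    so it earns its full max points.
    """
    if not 1 <= smallest_line <= len(line_points):
        raise ValueError("smallest_line is outside the chart")
    if not 0 <= letters_correct <= LETTERS_PER_LINE:
        raise ValueError("letters_correct must be between 0 and 6")
    credit_for_larger_lines = sum(line_points[: smallest_line - 1])
    fraction_read = letters_correct / LETTERS_PER_LINE
    return credit_for_larger_lines + line_points[smallest_line - 1] * fraction_read


print(snellen_score(5, 6))  # line 5 read 6 for 6, nothing on line 6
print(snellen_score(6, 3))  # 3 of 6 letters on line 6
```

Those two calls reproduce the two examples in the paragraph above: 238 and 288 points.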

The next two elements on the chart are similar to a 1951 US Air Force Resolution Test Chart. The basic idea for this came from the pioneering work FinnAccuracy developed to evaluate optical equipment. However, I made a few modifications to their test based on feedback I received from several optics testers during my peer-review phase. The first column had black lines on a white background, which I refer to as the high contrast set. To the right of it was another column containing dark gray lines on a medium gray background, which I refer to as the low contrast set. Each tester found the smallest set of lines where they could still differentiate between the lines, meaning they weren’t washed out and simply appearing like a gray box. Leupold shared a great illustration that shows what I’m talking about (below). I showed each tester this illustration to help them understand what they were looking for. I recorded the number each tester identified for the set of high contrast lines they could still resolve, as well as the number for the low contrast set of lines.

I also completely randomized the order of the scopes presented to each tester, so testers weren’t always looking through the same scopes first. I also asked testers to take a break after they’d been looking through scopes for a few minutes. Your eyes can start to fatigue after about 15 minutes (depending on age and eyes), so we tried to prevent someone straining to look through scopes beyond that.

Wow, that’s a lot of information! But I know there are some detail guys out there that would question all this stuff the minute I published it, so I just want to try to be completely transparent about where all this data came from.

The Optical Clarity Results

Finally, ready to see the data? Here are the results of the eye exam chart, averaged over all the testers.

The Zeiss Victory FL Diavari 6-24×56 was the clear winner for the Snellen eye charts (pardon the pun). The Schmidt and Bender PMII 5-25×56 was not far behind, and the Hensoldt ZF 3.5-26×56 performed outstandingly as well. One surprise was how many Nightforce scopes ended up in the top half of this test. In fact, there were 2 within the top 5 … including the Nightforce NXS 5.5-22×50, which had been riding on my magnum hunting/target rifle for almost 2 years in rough conditions.

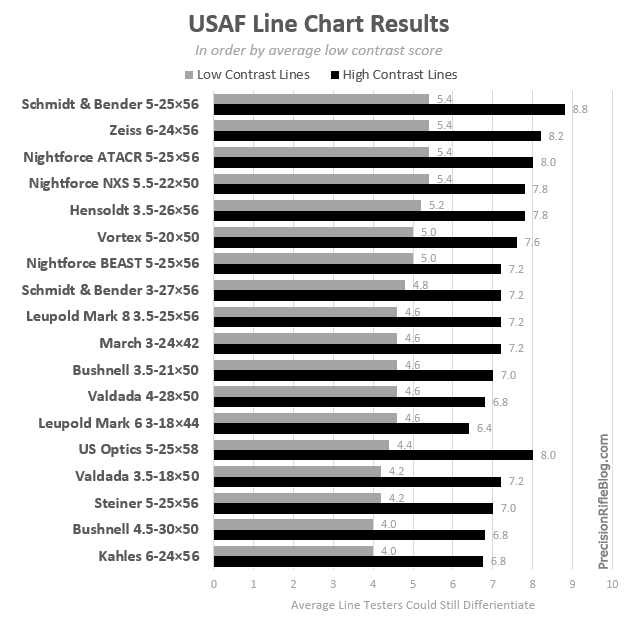

Here are the results from the USAF line charts. The scopes are ordered by the score for the low contrast set.

The top performers with the USAF Line Charts were similar to the Snellen eye exam charts, especially when you just look at the results for the high contrast set. I fully expected to see this type of correlation, which provides some confirmation for the validity of these tests.

But there was a slightly different mix when the chart is ordered by the low contrast performance, which is what you see above. For example, testers were able to really pick up contrast with the Vortex Razor HD 5-20×50 scope, although it didn’t perform as well in the resolution test. There were a few other scopes showing this pattern. These scope designs may lean more towards contrast than resolution.

On the other hand, there were some scopes like the US Optics ER25 5-25×58 that showed the opposite behavior, performing well on the other tests and well on the high contrast set of lines, but not performing well on the low contrast lines.

Contrast is especially important in low light conditions, so hunters or long-range shooters that find themselves in low light conditions frequently may want to pay special attention to these results. Although I didn’t specifically test in low light conditions, the results of the low contrast test should be indicative of the performance you can reasonably expect in that scenario.

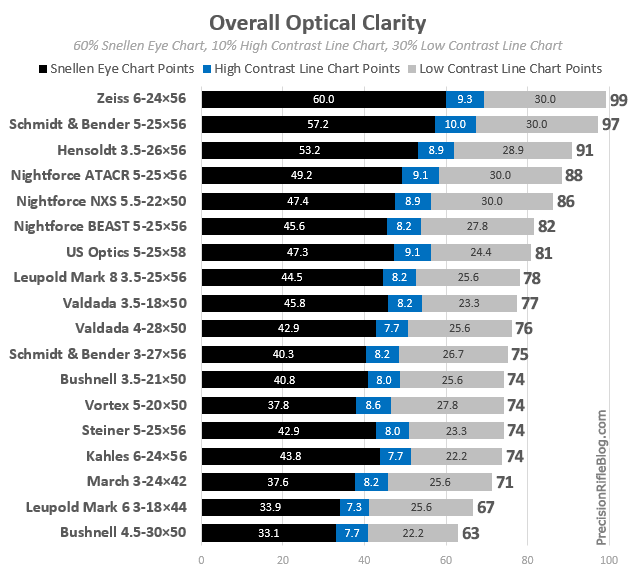

Combined Optical Clarity Score

I know this is a lot to take in. Since these tests were all focused around resolution and contrast, I combined them into a single score for “optical clarity.” This essentially represents the overall image quality of the scope. I thought the Snellen tests were the most reliable, since they had a natural check built in that ensures the tester really can see what they say they can. The Snellen chart is focused primarily on resolution, so I hoped to infer contrast more from the USAF line charts. That is why I weighted the score from the low contrast USAF line chart a little higher. Here are the weights I used:

| Elements to Optical Clarity Score | Weights |
|---|---|
| Snellen Eye Chart | 60% |
| High Contrast USAF Line Chart | 10% |
| Low Contrast USAF Line Chart | 30% |

To calculate the combined score, the results from each test were normalized to a 100 point scale. Then I combined those according to the weights above. Here are the combined results, which reflect the average overall image quality the six testers found for each scope.
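As a rough illustration of that calculation, here is a Python sketch. The weights come from the table above, but the normalization method is an assumption on my part for this example: each test is scaled so the best-scoring scope gets 100, and a higher raw number is treated as better on every test. The function names, scope labels, and raw numbers are all hypothetical, not measured data.

```python
# Weights from the optical clarity table in this post.
CLARITY_WEIGHTS = {
    "snellen": 0.60,
    "usaf_high_contrast": 0.10,
    "usaf_low_contrast": 0.30,
}


def normalize_to_100(raw_by_scope):
    """Scale raw averages so the best scope scores 100 (ASSUMED method)."""
    best = max(raw_by_scope.values())
    if best <= 0:
        raise ValueError("need at least one positive raw score")
    return {scope: 100.0 * raw / best for scope, raw in raw_by_scope.items()}


def combined_clarity(raw_results, weights=CLARITY_WEIGHTS):
    """raw_results maps test name -> {scope: raw average across testers}."""
    normalized = {test: normalize_to_100(raw_results[test]) for test in weights}
    scopes = list(next(iter(raw_results.values())))
    return {
        scope: sum(weights[test] * normalized[test][scope] for test in weights)
        for scope in scopes
    }


# Hypothetical raw averages for two blind-labeled scopes (NOT real results).
raw = {
    "snellen": {"A": 300.0, "B": 240.0},
    "usaf_high_contrast": {"A": 10.0, "B": 8.0},
    "usaf_low_contrast": {"A": 6.0, "B": 6.0},
}

for scope, score in combined_clarity(raw).items():
    print(scope, score)
```

With a different normalization (for example, scaling against the theoretical max score instead of the best scope), the absolute numbers would shift but the approach stays the same.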

You can see the Zeiss Victory FL Diavari 6-24×56 ended up on top. I remember the moment when I first took the Zeiss scope out of the box and looked through it. While it’s hard to know how good a scope is just glancing through it, I could immediately see it was going to be a competitor. And it turned out to be on top out of this long list of capable scopes. But the Schmidt and Bender PMII 5-25×56 wasn’t far behind it. After all the results were added up, those two scopes stood out in terms of optical clarity.

One thing to keep in mind is that scopes with a smaller objective lens diameter should theoretically have lower resolution than scopes with larger objectives (all other things being equal). According to Nikon, “Given the same magnification, the larger the objective diameter, the greater the light-collecting power. This results in higher resolution and a brighter image.” So the scopes with less than a 56mm objective that finished well deserve a tip of the hat, including the Nightforce NXS 5.5-22×50, Valdada IOR 3.5-18×50, Valdada IOR RECON 4-28×50, Bushnell Elite Tactical 3.5-21×50, and Vortex Razor HD 5-20×50. And tactical scopes that have a much smaller objective like the March 3-24×42 FFP and the Leupold Mark 6 3-18×44 may simply have had too much of a handicap to overcome when compared to these larger scopes.

I was surprised to see the new Schmidt and Bender 3-27×56 High Power scope finish so low. I had actually cast my vote for that scope to end up on top overall, but it had disappointing performance here. They may still have a few kinks to work out in this new design, or maybe I just got a bad unit. I actually tried to contact S&B a couple times through this test, with no response. This was one of the brand new scopes that the guys at EuroOptic.com let me borrow for the tests, and since it has a $7,000 price tag … I didn’t feel comfortable asking them for another one to double-check. The optical system for the 3-27 is a more complex design that likely has more lenses than the 5-25, which could contribute to its performance.

I was also surprised by how many Nightforce scopes were represented in the top few spots. 50% of the top 6 scopes were Nightforce. Personally, when I think of Nightforce, I don’t think best-in-class optical clarity … I think durability or maybe even repeatability, but not image quality. Don’t get me wrong, I feel like Nightforce makes a great scope … I personally own one and paid retail for it. However, this is exactly why I’m so drawn to an objective, data-driven approach. It is hard for us to look through scopes, even if they’re side-by-side, and be able to rank clarity … and when it involves this many, it’s impossible. The human brain just isn’t made for that, and our short term memory simply can’t hold on to all the information we need for a valid comparison. Here is an excerpt published by the Vanderbilt Vision Research Center on this exact topic:

At any instant, our visual system allows us to perceive a rich and detailed visual world. Yet our internal, explicit representation of this visual world is extremely sparse: we can only hold in mind a minute fraction of the visual scene. These mental representations are stored in visual short-term memory (VSTM). Even though VSTM is essential for the execution of a wide array of perceptual and cognitive functions, and is supported by an extensive network of brain regions, its storage capacity is severely limited.

My point of bringing that up is that maybe we trust our brains too much when making visual comparisons. Even with simple information (not even a full visual scene), studies have shown that we can only store around 5-7 things in our short-term memory at one time. But, the testing procedures I used here help us overcome the limits of visual short-term memory by capturing what testers saw in the moment, and storing that outside of their head for later comparison. That’s at least why I personally trust these results more than my personal experience. I also recognize that all humans are biased … including me. So an objective, double-blind test can sometimes reveal hard truths that our bias might make it hard for us to see otherwise.

Other Posts in this Series

This is just one of a whole series of posts related to this high-end tactical scope field test. Here are links to the others:

- Field Test Overview & Rifle Scope Line-Up: Overview of how I came up with the tests, what scopes were included, and where each scope came from.
- Optical Performance Results
  - Summary & Part 1: Provides summary and overall score for optical performance. Explains how optical clarity (i.e. image quality) was measured, and provides detailed results for those tests.
  - Part 2: Covers detailed results for measured field of view, max magnification, and zoom ratio.
- Ergonomics & Experience Behind the Scope
  - Part 1: Side-by-side comparisons on topics like weight, size, eye relief, and how easy turrets are to use and read.
  - Part 2 & Part 3: Goes through each scope highlighting the unique features, provides a demo video from the shooter’s perspective, and includes a photo gallery with shots from every angle.
  - Summary: Provides overall scores related to ergonomics and explains what those are based on.
- Advanced Features
  - Reticles: See every tactical reticle offered on each scope.
  - Misc Features: Covers features like illumination, focal plane, zero stop, locking turrets, MTC, mil-spec anodizing, one-piece tubes.
  - Warranty & Where They’re Made: Shows where each scope is made, and covers the details of the warranty terms and where the work is performed.
  - Summary: Overall scores related to advanced features and how those were calculated.
- Mechanical Performance
- Summary & Overall Scores: Provides summary and overall score for entire field test.

PrecisionRifleBlog.com A DATA-DRIVEN Approach to Precision Rifles, Optics & Gear

I appreciate all of this information, and I’m looking forward to your future reports. Thank you for your investment.

Hi Cal,

Wonderful and sorely needed information on an aspect of shooting sports that is the most complicated yet arguably important component of the complete rifle. I myself asked the manufacturers if they published white papers of their products and received no response from any of the manufacturers.

Lastly, high resolution is much more expensive to provide than bright contrasty light gathering ability as the former requires high quality lenses and the latter can be provided by larger objectives.

Many thanks for what was a very time consuming but vastly important topic!

Bruce

Thanks, Bruce. Yeah, I wish the manufacturers would publish white papers like the ones that camera lens companies publish that include things like a Modulation Transfer Function (MTF) chart. They won’t do it until enough customers demand it, so I’m glad you asked for more details from them … but I’m not surprised you didn’t get a response. Honestly, I’m sure I wouldn’t get a response from these guys if I didn’t have this platform. In fact, some of the companies wouldn’t respond until after I started publishing results … then they were “ready and willing to help me any way they could.” 😉 In fact, I never got a response from Schmidt and Bender’s corporate office after multiple attempts. I eventually had someone forward me contact info for a US-based Schmidt and Bender rep after I’d published the dismal results for that scope, and I reached out to him. Some of these companies are far more responsive to customers than others, just like in most industries.

Glad you found this info helpful. I’m certainly proud of it. I put a ton of effort into trying to make this as objective and useful as possible, so I appreciate the encouragement.

Thanks,

Cal

Wow! What a huge amount of work that you put into this project. One test is worth a 1000 expert opinions and you did an outstanding job! Thanks for taking the time to do such an exhaustive test. I really appreciate the objective nature of the test along with complete data disclosure. This will allow me to make a purchasing decision based on the characteristics that matter most to my particular situation.

Yes sir, I appreciate the kind words. It was a MASSIVE amount of work. Glad you find it helpful. There is a lot more to come over the next couple weeks.

Thanks so much for your time and effort on this mate, really appreciate you doing such a thorough job. Very much looking forward to the rest of the results.

Hi.

Great test you are doing.

I do have a question regarding the optical performance test.

How will you perform this test when testing scopes with less than 18x magnification?

Once again, great test.

Martin

Well, I may not test scopes that don’t have at least 18x. At least I don’t plan on doing that at this point. I’m focused on long-range, precision rifles and 18x seems like a good place to start. I know there are guys out there using 10x fixed powered scopes, but if you look at what the best long-range, precision shooters in the world are using … it’s not a 10x fixed scope. 90+% are using variable powered scopes with at least 18x on the high end, so that’s where I’m focused.

Ok.

I was just wondering what would happen if you tested, for example, a 10x scope. Would you place the target at 55.5 yards and do the same test?

Would the results be directly comparable to the tests you did now on 100 yards at 18x or would it be incorrect to do it like that?

I don’t have the answers myself so I don’t mean to criticise, just interested in these things.

Martin

Yep, no problem. I appreciate the comments. I don’t think it’d be directly applicable if you modified the test. If I tested lower powered scopes they’d just have to fall in their own class, and not be comparable to these original higher powered scopes. But that seems reasonable. I read an optics test article in Outdoor Life last month, and they compared a $2000 Leupold 7-42×56 with a $400 Nikon 3.5-14×40, and a $250 Weaver 1-4×24. Should those really be in the same comparison? Is someone really in the market for all of those scopes?

Anyway, that’s why I tried to be very specific about the scopes I chose and the guidelines of at least 6x on the low end and 18x on the high end. I wanted to avoid the scattered, random comparison that I see a lot of people do.

Thanks again for the comments.

Cal, both Clayton and I found this to be very informative and are amazed at the level of detail you went to to put this on. Good job man, we are excited to see the rest of the results.

Jonathan

Hey, as a fellow engineer I am really impressed with the diligence you are putting into this effort (shoot{pardon the pun} but all you need are some references and footnotes and you have a(nother) Thesis). Howevvvvvver, please hurry because this money is burning a hole in my gun safe and I swore I wouldn’t spend premium money on a scope until your results were in and I played with the top three!

I have never read a more thorough write up on a particular aspect of precision shooting in my life. This must have taken an inordinate amount of planning and co-ordination to get off the ground. If only these scopes were readily available in Namibia where I live.

Thanks Charl. To be honest, it did take a ton of planning and coordination. I’m glad you found it helpful.

I find it hard to believe that the S&B 3-27×56 finished so low. It was made for US SOCOM. It was put through rigorous testing and passed. I have the 3-27×56 and the 5-25×56, and the 3-27×56 is better in every way: better glass and no tunneling. I shoot every weekend and compare them along with the NF ATACR. The S&B 3-27×56 wins hands down. I think you got a faulty demo and should retest.

Very disappointing results for what outwardly appears to be a top scope. Was going to buy one but won’t touch one now unless they can be shown to be improved! Zoom range is a concern, as is the clarity issue. No explanation from S&B?

Yes, sir … it was disappointing. I did a survey before I started the tests to see what my readers thought would end up on top. I actually voted for the S&B 3-27×56 myself, because that is what I expected to perform the best. I’d obviously heard about the legendary performance of the 5-25×56 and assumed the newer scope from the same company that was almost twice as expensive would perform even better. I was as shocked as anyone. And it wasn’t just one issue … it wasn’t as stellar as I was hoping at any of the categories.

I explained Schmidt and Bender’s response to this in-depth in a comment on the summary post. Here is a link to that:

http://precisionrifleblog.com/2014/09/19/tactical-scopes-field-test-results-summary/#comment-1157

I haven’t heard anything from them since that time, so I’m not sure if this is something they’ve addressed in design or quality assurance at the factory. I’m with you … I won’t touch one unless they can be shown to be improved! They found at least one defect that they told me about, and it seems like one that should have been caught before it left the factory … especially at that price point.

With all that said, Schmidt and Bender didn’t build their well-respected brand on performance like that. So I have confidence that the Germans will get it figured out, and it may end up being the stellar product we were hoping for. It’s just a lot of money to spend for being hopeful.

Thanks,

Cal

Really jaw-dropping test by any means. Personally I just can’t say enough thanks for your hard work.

Just one question bothers me. When testing optical clarity, what was your approach to diopter correction mechanism? From my experience, it matters a lot. Seems like I cannot find any mention of it anywhere in text. Thanks!

Hey, that’s a great question. I tried to be super-thorough in explaining everything, and must have just overlooked that detail. I essentially followed Todd Hodnett’s instructions that were published in an issue of SNIPER magazine a few months ago. Todd wrote an article that covered a few misconceptions related to scopes, and this was a specific topic he touched on. He said “The ocular adjustment is used to make the reticle crisp. Then we adjust the side parallax, or what I call the target focus.” I scanned in the ocular adjustment he is referring from the article, and it is shown below. This is the same thing a lot of people refer to as diopter adjustment.

So I spent time behind each scope before I set up for the optical tests, adjusting each one so that it had as crisp a reticle as possible. Then when I set them up for the tests, I preset the target focus (although each tester was encouraged to adjust the target focus on each scope for their own eyes). In keeping with the blind tests, they couldn’t actually see the target focus knob, but they could reach under the v-block and adjust it. This also helped ensure they weren’t using any distance index marks on the knobs to “ballpark” where the focus should be. Those are notoriously bad for being out of calibration, and they were for some of these. Great question!

Thanks,

Cal

I bought a Bushnell ERS 3.5 -21 X 50 with a Horus H59 reticle. It was the best I could afford.

These test results show that I made the best choice given my budget. My experience has borne out that this is a very good scope that could use better glass. Love the H59 reticle.

IMHO the best glass being made today is made in Japan, not the German SCHOTT types. These tests do not show that because the Japanese-made scopes like Bushnell do not specify that “ultra glass”.

Eric, you’re absolutely right. The Bushnell 3.5-21×50 Elite Tactical scope proved that it was an EXTREME value. I’ve told a few people that if your budget won’t allow you to go beyond $1500, or if you just don’t plan to shoot every month … the Bushnell scope is probably the way to go.

I believe the Nightforce scopes also source their glass from Japan, and it was really great as well. I’ve heard most scope companies use the same supplier in Japan, but simply specify the tolerances they’re willing to pay for. The same glass company might be the OEM supplier for lenses for a $100 scope and a $3000 scope. The only difference is how tight the tolerances are that the company buying the lenses specified. Tighter tolerances always equate to more money.

I’m going to have to disagree about the Japanese glass being better than the German glass. I think this test actually proved that the German glass was better. The #1, #2, and #3 scopes in terms of optical clarity were all European scopes, not Japanese. But once again, a lot of that might come down to the tolerances those companies specify and not the capabilities or quality control of the glass manufacturer itself. One thing is for sure, among the scopes I tested … Zeiss and the Schmidt and Bender PMII 5-25×56 were in a class of their own when it comes to optical quality. They were stunning, and it was obvious with them side-by-side.

Thanks for the feedback! Glad you’re happy with your decision. I’ve done two field tests now (the other was a rangefinder binocular field test), and in both cases Bushnell has clearly proven themselves to be the biggest value.

Thanks,

Cal

Now I need to find the best “unimount” one piece ring setup.

So many questions people ask don’t have a clear-cut answer … but you’re in luck, because this one does. The short answer: It’s the Spuhr mount. If you want a more elaborate answer, check out the post I just published on what scope mounts the top 50 shooters in the Precision Rifle Series are using. You can find it at:

Scope Mounts – What The Pros Use.

Wonderful reviews. Thanks so much for publicising your results. I was most disappointed to read that the new S&B 3-27×56 had an optical defect and a zoom ratio far from that advertised. Did S&B ever comment on the defects or errors, aside from saying they found a reason the clarity was not what it should have been? Very disappointing in a $6000+ scope. I WAS considering buying one, but unless S&B can guarantee these issues have been resolved and were a one-off, they have no chance. It will be a 5-25 PM II or a BEAST instead. Thanks again.

I explained Schmidt and Bender’s response to this in depth in a comment on the summary post, and that is the last I’ve heard from them. Here is a link to that:

http://precisionrifleblog.com/2014/09/19/tactical-scopes-field-test-results-summary/#comment-1157

So I’m not sure they’ve made any changes to their design or to their QA process on the Schmidt and Bender PMII 3-27×56. I’d expect them to perfect the design over time, but I’m not sure where they are on that.

Thanks,

Cal

Cal, I’m curious how the optical quality tests would have turned out if you tested for veiling flare. I have a Razor Gen II that exhibits this behavior at high magnification even when it’s cloudy/overcast with the sunshade on. Viewing a target in the shadows (125 yards), regardless of the time of day, results to some degree in a low-contrast image, like looking through mild haze. It is much worse without the sunshade. It’s very disappointing since my scope has great turrets and reticle and has very good reviews.

Would your contrast tests be an indicator as to which scope would be susceptible to this effect?

Wow, Scott. Sorry to hear that. I’m not sure if the contrast tests would have captured that or not. I guess if it happens even when it’s overcast, it’s plausible they might have. I still haven’t used a Razor Gen II much personally, although I see a ton of guys running them. I wouldn’t be surprised if they were the most popular scope in the PRS now. Like you said, lots of people love the turrets and Vortex has a good selection of reticles to pick from … but you might have found one of the weak points of that design. No design is perfect, but that sounds like a pretty distracting one. Thanks for sharing, and sorry I couldn’t be more help.

Thanks,

Cal

Which generation Kahles did you test? I’m curious how the new Gen 3 would have performed in the optical test.

Sorry, Scott. I can’t remember what model that was. It might have been Gen 2. I’ve talked to the US Kahles distributor several times, and it sounds like Kahles is continually tweaking and improving the design. He was really excited about the last changes they made. He thought it made a measurable difference. I’ve yet to see it though.

I do REALLY like all the new reticles they released this year. Those are pretty killer. Could be the best reticles in the industry.

Thanks,

Cal

I ordered the Kahles Gen 3 with SKMR reticle. I’ll see how the optics are next week.

I love the SKMR reticle. About a year ago I drew up what I thought was the ideal reticle, and this year at SHOT Show Jeff Huber wanted to show me the new line of Kahles reticles … and when I lifted that one up to my eye, I laughed out loud. It is very, very similar to the design I had drawn up. Don’t get me wrong, I’m not saying they copied me. I never published my design. I think that one was designed by Shannon Kay (owns K&M training facility) and KMW … those people are WAY smarter than I am on this stuff! Little trivia for you: SKMR actually stands for Shannon Kay Mill Reticle. I’m just saying that it really aligns with what I think are the ideal features in a reticle. I REALLY like the floating open circle for the aiming point. I REALLY like the 0.2 mil windage marks. I really like how they used hash marks through the line, above the line, and below the line to differentiate. I’m professionally trained at presenting data in a way that speaks clearly, and they did a phenomenal job of that with that reticle. It’s well done. Both the SKMR1 and SKMR2 reticles are outstanding. Like I said, I think they may be the best reticles in the industry. Hope you love it!

Thanks,

Cal

Just wondering if you have had a chance to review or use one of the Sightron scopes. The above testing is very eye opening and brings clarity (just had to, sorry 😉 ) to a subject that is generally obscured by a bunch of voodoo.

Thanks, Eric. This is the only time I’ve ever seen an approach like this used, and it seems to have merit and provide insight to me. I just like to have results from an objective approach, and it’s hard to have a vision for how to do that with something as complex as optics. And through all this process, I talked to a lot of industry experts … and optics are COMPLEX. I have a whole new respect for guys in this area. Of course there is a lot of misinformation on this topic out there too. Maybe more than its fair share!

Unfortunately, I haven’t ever even held a Sightron scope. But I’ve heard a lot of guys talk about them. Sorry I couldn’t be more help.

Thanks,

Cal